Website performance optimization, or simply website optimization, is the process of improving how quickly a website loads in the browser. It generally involves optimizing the website’s scripts, HTML, and CSS code and reducing the number of page components, such as images, scripts, or videos, so that pages load faster.

What is web performance?

Web performance is the speed at which web pages are loaded and displayed in the user’s web browser.

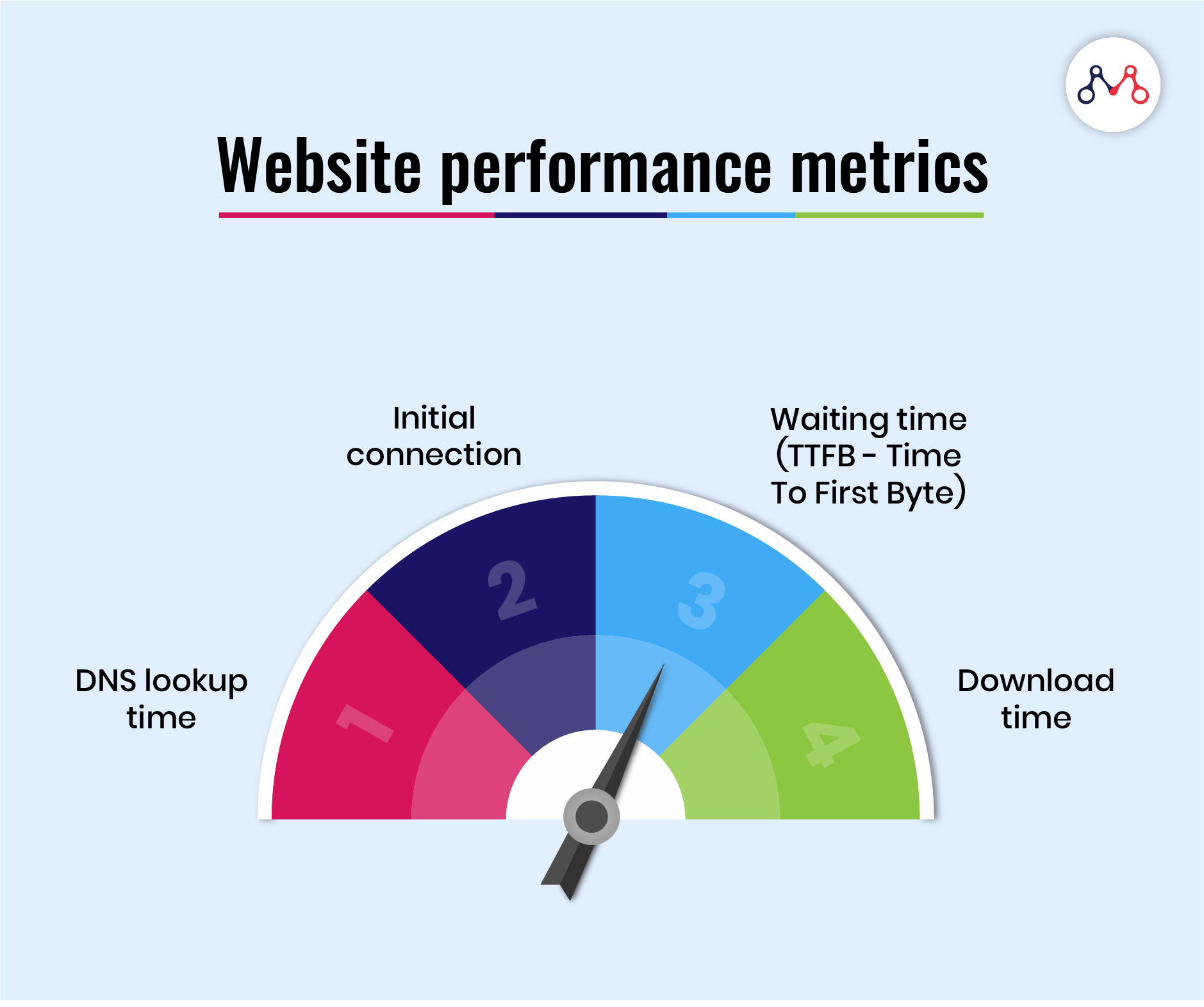

The following are the main website performance metrics:

#1 DNS lookup time

The Domain Name System (DNS) is the phonebook of the Internet. Users access online information through domain names, like www.mantralabsglobal.com, while web browsers communicate using Internet Protocol (IP) addresses. DNS translates domain names to IP addresses so that browsers can load Internet resources. DNS lookup time is the time spent on this translation before the browser can connect to the server.
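You can observe DNS lookup time directly by timing a name resolution. The following is a minimal Python sketch, assuming the operating system’s resolver (reached through the standard library’s `socket.getaddrinfo`) stands in for the browser’s. The helper name `dns_lookup_time` is hypothetical, and browsers keep their own DNS caches, so treat the numbers as indicative only.

```python
import socket
import time


def dns_lookup_time(hostname: str) -> tuple[float, list[str]]:
    """Resolve hostname; return (elapsed milliseconds, unique IP addresses)."""
    start = time.perf_counter()
    # The system resolver may answer from a local cache, which is exactly
    # what DNS caching is meant to achieve.
    infos = socket.getaddrinfo(hostname, None)
    elapsed_ms = (time.perf_counter() - start) * 1000
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    addresses = sorted({info[4][0] for info in infos})
    return elapsed_ms, addresses


if __name__ == "__main__":
    ms, ips = dns_lookup_time("localhost")
    print(f"localhost -> {ips} in {ms:.2f} ms")
```

Running it twice against a real domain usually shows the second lookup is faster, because the answer is cached.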

#2 Initial connection

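Initial connection is the time taken for the handshake between the browser and the server before the browser can request the page’s contents. Handshaking is the process by which two devices (here, the browser and the server) establish communication. It begins with the browser sending a message to the server indicating that it wants to open a connection. Over TCP this is a three-way exchange (SYN, SYN-ACK, ACK), and for HTTPS pages a TLS handshake follows before any content is sent.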
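The handshake can be timed the same way. This hypothetical `connect_time` helper measures how long Python’s `socket.create_connection` takes to complete the TCP handshake; the demo opens a local listening socket so it runs without network access. It covers plain TCP only; an HTTPS connection would add the TLS handshake on top.

```python
import socket
import time


def connect_time(host: str, port: int, timeout: float = 5.0) -> float:
    """Return the time in milliseconds needed to open a TCP connection."""
    start = time.perf_counter()
    # create_connection returns once the TCP handshake has completed.
    # Passing an IP address (not a hostname) keeps DNS lookup out of the measurement.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed_ms = (time.perf_counter() - start) * 1000
    return elapsed_ms


if __name__ == "__main__":
    # A local listening socket acts as a stand-in server, so the demo runs offline.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
        server.listen()
        port = server.getsockname()[1]
        print(f"TCP handshake took {connect_time('127.0.0.1', port):.3f} ms")
```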

#3 Waiting time (TTFB)

Waiting time is the time spent waiting for the initial response, also known as Time To First Byte (TTFB). It captures the latency (the delay between sending a request and the start of the data transfer) of a round trip to the server, as well as the time the server spends processing the request and preparing its response.

#4 Download Time

Download time is the time spent receiving the response data, from the first byte to the last.
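Waiting time and download time can be separated by noting when the response headers arrive and when the last byte of the body has been read. This is a sketch under stated assumptions: it uses the standard library’s `http.client` against a throwaway local server (`start_demo_server` and `measure` are hypothetical names), and it treats the moment the headers have been parsed as an approximation of the first byte. Browser developer tools report these phases more precisely.

```python
import http.client
import http.server
import threading
import time


def start_demo_server(payload_size: int = 100_000) -> http.server.HTTPServer:
    """Start a throwaway local HTTP server (a stand-in for a real site) on a free port."""
    payload = b"x" * payload_size

    class Handler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        def log_message(self, format, *args):  # silence per-request logging
            pass

    server = http.server.HTTPServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def measure(host: str, port: int, path: str = "/") -> dict[str, float]:
    """Time the connection, waiting (TTFB) and download phases of one GET, in ms."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    t0 = time.perf_counter()
    conn.connect()                 # initial connection (TCP handshake)
    t1 = time.perf_counter()
    conn.request("GET", path)
    response = conn.getresponse()  # returns once the status line and headers are read
    t2 = time.perf_counter()
    body = response.read()         # receive the remaining response data
    t3 = time.perf_counter()
    conn.close()
    return {
        "connect_ms": (t1 - t0) * 1000,
        "ttfb_ms": (t2 - t1) * 1000,
        "download_ms": (t3 - t2) * 1000,
        "bytes": len(body),
    }


if __name__ == "__main__":
    server = start_demo_server()
    try:
        print(measure("127.0.0.1", server.server_address[1]))
    finally:
        server.shutdown()
        server.server_close()
```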

11 Proven website performance optimization techniques

You’ll need to consider the following to enhance a website’s performance.

#1 Reduce DNS lookup time

Implement the following to reduce DNS lookup time:

- Reduce the number of hostnames used to build a web page, since each distinct hostname needs its own lookup.

- Host third-party resources locally, which removes the DNS lookups for those external hostnames.

- Use DNS caching: cache times (TTLs) can be defined for different hosts, so repeat lookups are answered from the cache instead of being resolved again.

- Use DNS prefetching, which lets browsers perform DNS lookups in the background while the user browses the current page.

- Defer the parsing of JavaScript that is not needed during the initial page load, since such scripts block rendering.

- Use a fast DNS provider, that is, one with minimal lookup times.
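The hostname and prefetching advice can be combined: collect the distinct hostnames a page references, then emit a DNS prefetch hint for each third-party one. This is a minimal sketch assuming a static HTML string; `HostCollector` and `dns_prefetch_hints` are hypothetical names and the hostnames are placeholders, while `<link rel="dns-prefetch">` is the standard HTML resource hint that asks the browser to resolve a hostname early.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class HostCollector(HTMLParser):
    """Collect the hostnames referenced by src/href attributes in an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.hosts: set[str] = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                host = urlparse(value).hostname
                if host:  # relative URLs such as /img/logo.png have no hostname
                    self.hosts.add(host)


def dns_prefetch_hints(html: str, own_host: str) -> list[str]:
    """Return a dns-prefetch <link> tag for every third-party hostname on the page."""
    collector = HostCollector()
    collector.feed(html)
    # len(collector.hosts) is the number of distinct hostnames worth reducing.
    third_party = sorted(collector.hosts - {own_host})
    return [f'<link rel="dns-prefetch" href="//{host}">' for host in third_party]


if __name__ == "__main__":
    page = (
        '<link rel="stylesheet" href="https://fonts.example.com/css">'
        '<script src="https://cdn.example.net/lib.js"></script>'
        '<img src="/img/logo.png">'
    )
    for tag in dns_prefetch_hints(page, "www.mysite.example"):
        print(tag)
```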

#2 Browser/Web cache

The browser (web) cache is a temporary storage location on the user’s computer for files that the browser downloads to display websites. Locally cached files may include any documents from a website, such as HTML files, CSS style sheets, JavaScript scripts, graphic images, and other multimedia content. When the user revisits the website, the browser checks for updated content and downloads only the files that have changed or are not already in the cache. This reduces bandwidth usage on both the user and server side and makes the page load faster.
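The “check for updated content” step is usually an HTTP conditional request: the server labels each file with an `ETag` validator, the browser sends it back in an `If-None-Match` header, and if the file has not changed the server answers `304 Not Modified` with no body. A `Cache-Control: max-age` header lets the browser skip even that check for a while. Below is a minimal server-side sketch; `make_etag` and `respond` are hypothetical helpers, and the one-day `max-age` is an arbitrary example value.

```python
import hashlib


def make_etag(content: bytes) -> str:
    """Derive a strong ETag from the file contents, so it changes when the file does."""
    return '"' + hashlib.sha256(content).hexdigest()[:16] + '"'


def respond(content: bytes, request_headers: dict[str, str]) -> tuple[int, dict[str, str], bytes]:
    """Return (status, headers, body), answering 304 when the cached copy is current.

    Simplified: header names are matched exactly, and If-None-Match lists or "*"
    are not handled.
    """
    etag = make_etag(content)
    headers = {
        "ETag": etag,
        # Let the browser reuse the file for one day before revalidating.
        "Cache-Control": "public, max-age=86400",
    }
    if request_headers.get("If-None-Match") == etag:
        return 304, headers, b""  # cached copy is still valid: send no body
    return 200, headers, content


if __name__ == "__main__":
    css = b"body { margin: 0; }"
    status, headers, _ = respond(css, {})  # first visit: full download
    print(status, headers["ETag"])
    status, _, body = respond(css, {"If-None-Match": headers["ETag"]})  # revisit
    print(status, len(body))  # 304 0
```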

#3 Image Optimization

Image optimization is the process of delivering high-quality images in the right format, dimensions, and resolution at the smallest possible file size. There are different ways to optimize images: you can resize, compress, or cache them.
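Resizing is often the simplest win, because an image should not be larger than the area it is displayed in. This sketch computes the target dimensions and the share of pixels removed; the function names and sizes are illustrative, pixel count is only a rough proxy for file size (which also depends on format and content), and the actual resizing and compression would be done with an image library or build tool.

```python
def fit_within(width: int, height: int, max_width: int, max_height: int) -> tuple[int, int]:
    """Scale (width, height) to fit the display box while keeping the aspect ratio.

    The image is never scaled up: upscaling adds bytes without adding detail.
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def pixel_savings(original: tuple[int, int], resized: tuple[int, int]) -> float:
    """Fraction of pixels removed by resizing, a rough proxy for the bytes saved."""
    return 1 - (resized[0] * resized[1]) / (original[0] * original[1])


if __name__ == "__main__":
    # A 4000x3000 camera photo shown in an 800x600 slot (placeholder numbers)
    target = fit_within(4000, 3000, 800, 600)
    print(target)  # (800, 600)
    print(f"{pixel_savings((4000, 3000), target):.0%} fewer pixels")  # 96% fewer pixels
```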

#4 HTML, CSS, and JS Minification

When moving a website’s source code to production, minify it (for example, with Uglify for JavaScript) to reduce the overall size of the page. Minification strips characters the browser does not need, such as comments and extra whitespace, so the page content downloads faster.
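To show what minification removes, here is a deliberately naive CSS minifier built on regular expressions. It is a sketch, not a replacement for a real build tool: `minify_css` is a hypothetical name, and it ignores edge cases such as comment-like text inside strings or a space before `:` that matters in a selector (for example `div :hover`).

```python
import re


def minify_css(css: str) -> str:
    """Naively minify CSS: drop comments and whitespace the browser does not need."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)          # trim spaces around punctuation
    css = css.replace(";}", "}")                           # last semicolon in a block is optional
    return css.strip()


if __name__ == "__main__":
    source = "/* header styles */\nh1 {\n  margin: 0 auto;\n  color: #333;\n}\n"
    minified = minify_css(source)
    print(minified)  # h1{margin:0 auto;color:#333}
    print(f"{len(source)} -> {len(minified)} characters")
```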

#5 HTML hierarchy

Maintain the standard HTML hierarchy: push render-blocking scripts to the bottom of the page and keep only the required assets in the page’s head section. This way, users do not have to wait for render-blocking scripts to load before they see the page.
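Before moving scripts, it helps to know which ones block rendering. This sketch flags external scripts in the head section that lack `async` or `defer` (module scripts are deferred by default, so they are skipped); adding `defer` is a standard alternative to moving such a script to the bottom of the page. `find_render_blocking_scripts` is a hypothetical helper, and inline scripts are not checked.

```python
from html.parser import HTMLParser


class RenderBlockingScriptFinder(HTMLParser):
    """Find external <script> tags in <head> that have neither async nor defer."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)  # boolean attributes map to None
            # A classic external script pauses HTML parsing until it is downloaded
            # and executed, which delays the first render.
            if (
                "src" in attributes
                and "async" not in attributes
                and "defer" not in attributes
                and attributes.get("type") != "module"
            ):
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking_scripts(html: str) -> list[str]:
    finder = RenderBlockingScriptFinder()
    finder.feed(html)
    return finder.blocking
```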

#6 Use Sprites

A sprite is a single image that combines a group of smaller images used on a web page. Every separate image costs a server request, which consumes bandwidth and lowers the page speed score, so it is better to combine as many images as possible into sprites.
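The bookkeeping behind a sprite is simple: stack the images, remember each one’s offset, and use CSS `background-position` to show only that region. A sketch with hypothetical icon names, a placeholder `sprite.png`, and a hypothetical `sprite_css` helper; compositing the actual image file would be done with an image editor or build tool, which is not shown.

```python
from dataclasses import dataclass


@dataclass
class Icon:
    name: str
    width: int
    height: int


def sprite_css(icons: list[Icon], sprite_url: str = "sprite.png") -> tuple[int, int, str]:
    """Stack icons vertically in one sprite; return (sprite width, sprite height, CSS)."""
    rules = []
    y = 0
    for icon in icons:
        # Shift the shared image up by the icon's offset so only its region shows.
        rules.append(
            f".icon-{icon.name} {{ background: url({sprite_url}) 0 {-y}px no-repeat; "
            f"width: {icon.width}px; height: {icon.height}px; }}"
        )
        y += icon.height
    width = max((icon.width for icon in icons), default=0)
    return width, y, "\n".join(rules)


if __name__ == "__main__":
    w, h, css = sprite_css([Icon("home", 24, 24), Icon("search", 32, 32), Icon("cart", 24, 24)])
    print(f"sprite: {w}x{h}px")  # sprite: 32x80px
    print(css)
```

Three icons that would have needed three requests now need one.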

#7 Enable compression

GZIP is the widely recommended compression method for web content, and it makes efficient use of bandwidth when delivering a page’s contents. For example, if the overall size of a page’s assets is 900 KB, enabling GZIP compression could reduce it to 600 KB or less. Less data travels over the network, so pages render faster.
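Servers usually compress responses on the fly when the browser advertises support with an `Accept-Encoding: gzip` request header, and they mark the result with `Content-Encoding: gzip`. To estimate the savings for a given asset, you can compress it yourself with Python’s `gzip` module. The helper name `gzip_savings` and the sample markup are illustrative; the actual ratio depends on the content, and already-compressed formats such as JPEG or PNG gain little.

```python
import gzip


def gzip_savings(data: bytes, level: int = 6) -> tuple[int, int]:
    """Return (original size, gzip-compressed size) in bytes."""
    compressed = gzip.compress(data, compresslevel=level)
    # gzip is lossless: the browser restores exactly the original bytes.
    return len(data), len(compressed)


if __name__ == "__main__":
    # Repetitive markup, as in typical HTML and CSS, compresses well.
    html = ('<div class="product-card"><h2>Item</h2><p>Description</p></div>\n' * 500).encode()
    before, after = gzip_savings(html)
    print(f"{before} bytes -> {after} bytes ({after / before:.1%} of original)")
```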

#8 Use secure channels/protocols

Prefer secure channels (HTTPS) to load web page contents. Encrypting the connection prevents attackers from injecting malware into the page on its way to the browser.
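A page served over HTTPS that still loads scripts, images, or stylesheets over plain `http://` (mixed content) loses that protection, and browsers block or warn about such resources. This rough audit, with the hypothetical helper `find_mixed_content`, lists those URLs; it checks only common tags and is an approximation, not a full implementation of the browser’s mixed-content rules.

```python
from html.parser import HTMLParser

# Tags whose src attribute makes the browser download a subresource
SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source"}


class MixedContentFinder(HTMLParser):
    """Collect subresource URLs that would be fetched over plain http://."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        url = None
        if tag in SRC_TAGS:
            url = attributes.get("src")
        elif tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower().split():
            url = attributes.get("href")
        # Ordinary <a href> links are navigation, not subresources, so they are ignored.
        if url and url.lower().startswith("http://"):
            self.insecure.append(url)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```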

#9 Reduce the number of redirections

Keep the number of redirects on a website to a minimum. Each redirect adds another request and response round trip, plus an extra DNS lookup when it points to a different hostname, which increases page load time.
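Redirect chains are a common source of extra hops, for example `http://` to `https://` to `www` to the final page. This sketch assumes the site’s redirects are available as a simple old-URL to new-URL mapping (the URLs and the helpers `resolve_redirects` and `flatten` are hypothetical). It follows each chain and rewrites every rule to point straight at its final destination, so each old URL costs at most one redirect.

```python
def resolve_redirects(redirects: dict[str, str], url: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow the redirect map from url; return (final URL, number of hops)."""
    hops = 0
    seen = {url}
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL directly at its final destination (one hop instead of a chain)."""
    return {source: resolve_redirects(redirects, source)[0] for source in redirects}


if __name__ == "__main__":
    chain = {
        "http://example.com/": "https://example.com/",
        "https://example.com/": "https://www.example.com/",
        "https://www.example.com/": "https://www.example.com/home",
    }
    print(resolve_redirects(chain, "http://example.com/"))  # ('https://www.example.com/home', 3)
    print(flatten(chain))
```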

#10 Use CDN

Serve static resources from CDN paths to improve the website’s load time. A CDN caches static resources in advance, so they can be delivered without a trip back to the origin server, which reduces load time. CDNs are also hosted in distributed data centers, so assets are fetched from the CDN host nearest to the user, boosting the website’s performance.
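Switching static resources to CDN paths often amounts to rewriting asset URLs at build or render time. A minimal sketch, assuming the CDN is configured to pull from the origin under the same paths and that URLs are absolute or root-relative; `cdn.example.net`, the extension list, and `cdn_url` are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical list of file types treated as static assets
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2")


def cdn_url(url: str, cdn_host: str) -> str:
    """Serve static assets from the CDN host; leave pages and other URLs unchanged."""
    parts = urlsplit(url)
    if not parts.path.lower().endswith(STATIC_EXTENSIONS):
        return url  # dynamic content stays on the origin server
    # Keep the path, query (e.g. cache-busting versions) and fragment; swap the host.
    return urlunsplit(("https", cdn_host, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    for link in ["/img/logo.png", "https://www.example.com/static/app.js?v=3", "/about"]:
        print(link, "->", cdn_url(link, "cdn.example.net"))
```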

#11 Avoid hotlinking

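Hotlinking is directly embedding content (such as an image) hosted on another site into your own website, so every page view pulls the file from the other site’s server. It consumes the hosting site’s bandwidth and makes your page depend on a server you don’t control, so avoid it and host such content yourself.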
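From the other side, a site can discourage others from hotlinking its files by checking the `Referer` request header, which browsers usually set to the page that embeds the resource. This sketch (with the hypothetical helper `allow_asset_request` and placeholder hostnames) allows requests without a `Referer`, because privacy settings and some proxies strip it; since the header can be omitted or forged, it is a deterrent rather than strict protection.

```python
from typing import Optional
from urllib.parse import urlsplit


def allow_asset_request(referer: Optional[str], allowed_hosts: set[str]) -> bool:
    """Decide whether to serve an image or other asset, based on the Referer header."""
    if not referer:
        # Missing Referer: direct visits, privacy settings, some proxies.
        # Blocking these would also turn away legitimate visitors.
        return True
    # The Referer is the page that embedded the asset; only our own pages may do so.
    return urlsplit(referer).hostname in allowed_hosts


if __name__ == "__main__":
    own_site = {"example.com", "www.example.com"}
    print(allow_asset_request("https://www.example.com/blog/post", own_site))  # True
    print(allow_asset_request("https://other.example.org/page", own_site))  # False
```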


Do you have any questions about your website’s performance? Feel free to comment or write to us at hello@mantralabsglobal.com, and stay tuned for our next article on 8 Factors that Affect Page Load Time & Website Optimization Strategies.
