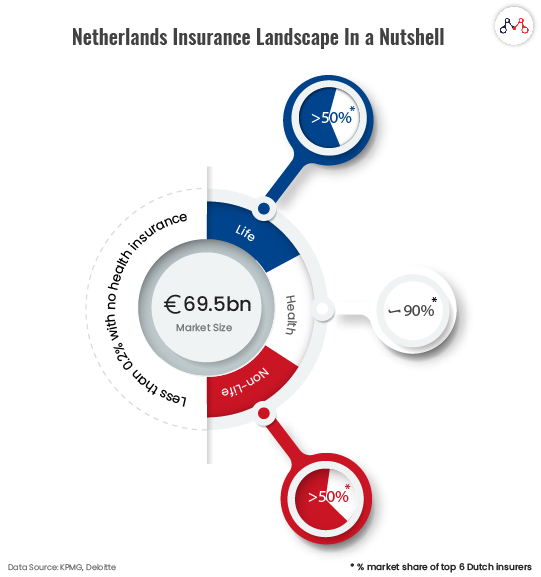

‘What more could people want’ in a nation that already ranks highest in terms of press and economic freedom, human development, quality of life, and happiness? On another note, insurance companies and the government must have been doing something right — over 99.8% of the Dutch population is insured!

This might portray the Netherlands as a saturated market for insurance. However, while the overall Dutch populace has health insurance, there’s still scope for life, non-life and better health insurance products.

The following infographic on the Netherlands’ insurance landscape offers some perspective.

Insurance Challenges in the Netherlands

KPMG reports that 65% of CIOs (Chief Information Officers) agree that a shortage of skills is preventing them from keeping up with the pace of change. [The skills shortage here covers big data, analytics, AI, enterprise and technical architecture, and DevOps.]

The privacy-technology paradox is one of the main reasons for the gap between insurance products and personalization. Strict European privacy regulations create a barrier for advanced technologies that rely on data.

Insurance is on the Tech-Radar

Dutch insurance companies are not only striving to match the pace of change but are also inclined to invest in futuristic technology. Many of these technologies can be grouped under Artificial Intelligence. What matters, however, is the impact of each individual technology and how the insurance sector is deploying it.

Current Technology Trends in Insurance in the Netherlands

Microservices

Microservices break large insurance schemes down into their simplest core functions. Organizations treat every microservice as a standalone service with its own API (Application Programming Interface).

Insurers in the Netherlands agree that adopting a microservices architecture early can give them a bigger competitive advantage. Microservices in travel and vehicle insurance look particularly promising in the Netherlands.
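
As a rough illustration of the "one function, one API" idea, here is a minimal Python sketch. The service names, JSON fields, and the daily rate are all hypothetical and chosen only for illustration. In a real deployment each service would run as its own process behind HTTP; plain functions are used here so the sketch stays runnable.

```python
import json

# Hypothetical sketch: two independent services, each exposing only its own
# small JSON API. Neither service knows anything about the other's internals.

def quote_service(request_json: str) -> str:
    """Travel-insurance quote API: takes a trip length, returns a premium."""
    request = json.loads(request_json)
    base_rate_per_day = 2.50  # assumed illustrative rate, in euros
    premium = round(base_rate_per_day * request["trip_days"], 2)
    return json.dumps({"premium_eur": premium})

def claims_service(request_json: str) -> str:
    """Claims-intake API: registers a claim and returns a reference."""
    request = json.loads(request_json)
    reference = f"CLM-{request['policy_id']}-{request['claim_no']:04d}"
    return json.dumps({"claim_reference": reference, "status": "received"})

# Callers talk to each service only through its JSON contract.
quote = json.loads(quote_service(json.dumps({"trip_days": 10})))
claim = json.loads(claims_service(json.dumps({"policy_id": "P42", "claim_no": 7})))
print(quote)
print(claim)
```

Because each service owns a single function and a single contract, either one can be rewritten, scaled, or redeployed without touching the other.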

Blockchain

In insurance, blockchain typically means smart contracts running on a distributed ledger.


The insurance industry already uses distributed ledgers to insure flight delays and handle lost baggage claims, and is expanding into shipping, health insurance, and consumer durables.
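
The flight-delay case lends itself to a small sketch of the rule such a contract might encode. This is plain Python, not code for any particular blockchain platform, and the policy fields, threshold, and payout amount are assumptions made for illustration. On a real ledger the reported delay would come from a data feed agreed by all parties.

```python
from dataclasses import dataclass

# Hypothetical sketch of a parametric flight-delay rule: the payout depends
# only on an objective, externally reported figure (the delay in minutes).

@dataclass(frozen=True)
class FlightDelayPolicy:
    policy_id: str
    delay_threshold_minutes: int  # delay at which the payout triggers
    payout_eur: float             # fixed amount paid once triggered

def settle(policy: FlightDelayPolicy, reported_delay_minutes: int) -> float:
    """Return the payout owed for a reported delay (0.0 below the threshold)."""
    if reported_delay_minutes >= policy.delay_threshold_minutes:
        return policy.payout_eur
    return 0.0

policy = FlightDelayPolicy("FD-001", delay_threshold_minutes=120, payout_eur=100.0)
print(settle(policy, 45))   # short delay, below the threshold
print(settle(policy, 150))  # long delay, at or above the threshold
```

Since the rule needs no human judgment, settlement can be automated, which is why this kind of product suits smart contracts.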

Edge Computing

Edge computing brings computation and data storage closer to the consumer’s location. It improves response times and can, at times, act in real time. Autonomous vehicles, home automation, and smart cities are among the sectors that deploy edge computing effectively.

Insured assets with edge computing capabilities help insurers offer better deals and customized policies.

Cognitive Expert Advisors

Augmenting customer service units with AI-powered bots and AI-assisted human advisors makes for a superior customer experience. The cognitive expert advisor is a combination of both.

Cognitive experts use advanced analytics, natural language processing, decision-making algorithms, and machine learning. This technology breaks the prevailing trade-offs between speed, cost, and quality in delivering insurance policies and products.

Fraud Analytics

Fraud analytics involves social network analytics, big data analytics, and social customer relationship management for rating claims, improving transparency, and identifying fraud.

AXA insurance has been using fraud analytics in its product OYAK to integrate all customer-related data into a coordinated corporate vision. The technology has enabled AXA to link two slightly different records from the same customer, preventing fraudulent instances.
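
Linking near-duplicate customer records can be approximated with fuzzy string matching. The sketch below is not AXA’s method; it simply uses Python’s standard `difflib.SequenceMatcher`, and the record fields, sample customers, and the 0.85 threshold are assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical sketch: treat two records as the same customer when their
# normalized text is similar enough. Fields and threshold are illustrative.

def normalize(record: dict) -> str:
    """Join the identifying fields into one lowercase comparison string."""
    fields = ("name", "birth_date", "postcode")
    return " ".join(str(record[field]).lower().strip() for field in fields)

def similarity(a: dict, b: dict) -> float:
    """Similarity ratio between 0.0 (nothing shared) and 1.0 (identical)."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def likely_same_customer(a: dict, b: dict, threshold: float = 0.85) -> bool:
    return similarity(a, b) >= threshold

# Made-up records: the second has a typo in the name and a reformatted postcode.
rec1 = {"name": "Jan de Vries", "birth_date": "1980-03-12", "postcode": "1012 AB"}
rec2 = {"name": "Jan de Vreis", "birth_date": "1980-03-12", "postcode": "1012AB"}
rec3 = {"name": "Petra Bakker", "birth_date": "1975-11-02", "postcode": "3511 CD"}

print(likely_same_customer(rec1, rec2))
print(likely_same_customer(rec1, rec3))
```

A production system would add domain-specific rules on top, but the principle is the same: small differences in spelling or formatting should not hide the fact that two records describe one person.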

AI-based Underwriting

AI-driven unmanned aerial vehicles, also known as drones, can examine sites that are otherwise too extreme for humans to visit.

Using such technologies for geological surveys makes the underwriting process more accurate. Insurers are aligning their risk management strategies with AI-based underwriting.


Machine Learning (ML)

ML relies on patterns in data and can perform tasks without explicit instructions. The computer takes in the customer’s data, learns from it, and begins to handle similar instances automatically.

InsurTech is leveraging machine learning to quote optimal prices and manage claims effectively. It is a cost-effective technology that works across different user personas.

Predictive Analytics

Predictive analytics studies current and historical facts to make predictions about future or otherwise unknown events.

Leading insurers in the Netherlands are using predictive analytics for controlling risks in underwriting, claims, marketing, and developing personalized products.
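
In its simplest form, predicting from historical facts means fitting a trend to past observations and extending it. The sketch below fits a least-squares line to a handful of made-up yearly claim counts and projects the next year. The figures are invented, and real models would use far richer features than a single trend line.

```python
# Hypothetical sketch: ordinary least-squares trend line over invented data.

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

years = [2015, 2016, 2017, 2018, 2019]
claims = [102, 108, 121, 131, 138]  # made-up yearly claim counts

slope, intercept = fit_line(years, claims)
forecast_2020 = slope * 2020 + intercept
print(f"Trend: {slope:.1f} extra claims per year")
print(f"Forecast for 2020: {forecast_2020:.1f} claims")
```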

Predictive Analytics in Insurance Use Case: Zurich

Switzerland’s largest insurer, Zurich, uses predictive analytics to identify the risks that its customers are ‘actually’ going to face. Predictive analytics incorporates machine learning to anticipate events beyond statistics and probability.

The open-source machine learning model brings the organization the following benefits.

- Zurich is capable of scaling analytics across the larger volumes of data generated through smart devices.

- There is flexibility to introduce new data sources and features and test against them in real time.

- Data scientists can mix-and-match tools to experiment and curate different data sets.

Predictive analytics is Zurich’s key differentiator, enabling it to move at the speed of the fastest product in the market.


Future Technology Trends That Have the Potential to Disrupt the Insurance Industry

“You’ll need other skills now. I tell my colleagues: go out, attend seminars, watch closely when doing groceries. Because you can learn from a customer-centric view at any moment.”

Wim Hekstra, CEO, Aegon Wholesale

Brain-Computer Interface (BCI)

BCI allows computers to interpret the user’s distinct brain patterns. At present, researchers are focusing on using BCI to treat neurodegenerative disorders, which could change medical underwriting schemes.

Human Augmentation

Human augmentation refers to cognitive and physical improvements that are integral to the human body. Present-day insurance policies cover humans and assets. The future calls for insurance for superhumans.

Smart Dust

Smart dust is a system of many tiny micro-electromechanical systems (MEMS). It comprises microscopic clusters of sensors, robots, cameras, and similar devices that detect changes in light, temperature, and other conditions. This can help the insurance industry by triggering alerts for events that are sensitive to such changes.

The future brings enormous opportunities for insurers with augmentation, AI, and machine learning. Insurers’ drive for accuracy, cost optimization, and personalized products is the force behind their experiments with technology.
