Microinsurance targets low-income households and individuals with little savings. Low premiums, low caps, and low coverage limits characterize microinsurance plans. They are designed to protect assets that traditional insurance schemes do not otherwise serve.

Because microinsurance relies on low-premium models, it demands low operational costs. This article covers how AI can help microinsurers bridge customer gaps.

Challenges in Distributing Microinsurance Policies

Globally, microinsurance penetration is only around 2-3% of the potential market size. Companies providing microinsurance policies face the following challenges:

- Being a forerunner in a competitive landscape.

- Making policies accessible through online channels.

- Developing user-friendly interfaces understandable to a layman.

- Improving the organization’s operational efficiencies by automating repetitive processes.

- Providing a responsive support system for both agent and customer queries.

- Offering quick and easy reimbursements and claim settlements.

Fortunately, technology can solve customer support, repetitive workflow, and scalability challenges to a great extent. The next section outlines the benefits of AI-based technology in the microinsurance sector.

Benefits of Technology Penetrating the Microinsurance Space

#1 Speeds up the Process

Paperwork, document handling, and data entry are tedious tasks today. AI-driven technologies such as intelligent document processing systems can help simplify insurance documentation and retrieval.

For example, Gramcover, an Indian startup in the microinsurance sector, uses direct document uploading and processing for faster insurance distribution in the rural sector.

#2 Scalable and Cost-effective

Because technology scales well, it has also enabled non-insurance companies to distribute insurance schemes at a disruptive scale.

Within a year of launching its in-trip insurance initiative, the cab-hailing service Ola was able to issue 2 crore (20 million) in-trip policies per month. The policy offers risk coverage against baggage loss, financial emergencies, medical expenses, and missed flights due to driver cancellations or uncontrollable delays.

AI-based systems are also cost-effective in the long run because the same system is adaptable across different platforms and is easily integrated across the enterprise.

The microinsurance space needs better customer-first policies that are both convenient and flexible to use. ‘On & Off’ microinsurance policies for farmers, which provide cover only when they need it, can change their buying behavior. The freedom to turn insurance protection off when they are unlikely to use or benefit from it lets customers choose a product that maximizes their utility.

At the same time, insurers will be able to spread their products more widely across the rural landscape because customers derive greater value from them.
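To make the ‘On & Off’ idea concrete, here is a minimal sketch of how a premium might be billed only for the days when cover is switched on. The flat daily rate, the `CoverPeriod` type, and the dates are assumptions for illustration and do not describe any insurer's actual pricing. The sketch also assumes the periods do not overlap.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CoverPeriod:
    """A hypothetical stretch of days during which cover is switched on."""
    start: date  # first day covered (inclusive)
    end: date    # last day covered (inclusive)

    def days(self) -> int:
        # Both endpoints count as covered days.
        return (self.end - self.start).days + 1


def on_off_premium(periods: list[CoverPeriod], daily_rate: float) -> float:
    """Charge a flat daily rate only for days when cover is on (assumed rule)."""
    return sum(p.days() for p in periods) * daily_rate


# Example: a farmer switches cover on for sowing and harvest only.
periods = [
    CoverPeriod(date(2024, 6, 1), date(2024, 6, 30)),    # 30 days
    CoverPeriod(date(2024, 10, 1), date(2024, 10, 15)),  # 15 days
]
premium = on_off_premium(periods, daily_rate=2.0)  # 45 covered days at 2.0 per day
```

In this example the farmer pays for 45 days rather than a full year, which is the utility gain the ‘On & Off’ model aims for.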

#3 Easy and Customer-friendly Claims

Consumers want faster reimbursements against their plans. Under the traditional process, a claim may take several months to be approved. Through distributed ledgers and guided access, documents or information can be made available in a fraction of a second.

MaxBupa, in association with Mobikwik, has introduced HospiCash, a microinsurance policy in the health domain. It has identified the low-income segment’s needs and accordingly takes care of customers’ out-of-pocket expenses (at ₹500/day).

The Mobikwik wallet ensures that the money is credited to the user daily without hassle.

Another example of easy claim settlement is ICICI Lombard’s motor insurance e-claim service. InstaSpect, a live video inspection feature on Lombard’s Insure app, allows users to register a claim instantly and helps them get immediate approvals. It also connects the user to the claim settlement manager, who inspects the damaged vehicle over a video call.

Real-time inspection and claims can benefit farmers. In the event of a machine or tractor breakdown, they need not wait for days for a claim inspector to come in person and assess the vehicle. Instead, using Artificial Intelligence and Machine Learning models, the inspection can be carried out within seconds via an app, after which the algorithm can decide (based on trained models) whether to approve or reject the claim.
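The decision step described above could be sketched as a simple rule on top of a model score. Everything here is hypothetical: the `damage_score` field stands in for the output of a trained model, and the thresholds, the auto-approval limit, and the extra "review" outcome (handing uncertain cases to a human) are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    claim_id: str
    amount: float        # claimed amount in rupees
    damage_score: float  # hypothetical model output in [0, 1]; higher = damage more credible


def decide(claim: Claim, approve_at: float = 0.8, reject_below: float = 0.3,
           auto_limit: float = 10_000.0) -> str:
    """Return 'approve', 'reject', or 'review' using assumed thresholds."""
    if claim.damage_score < reject_below:
        return "reject"
    if claim.damage_score >= approve_at and claim.amount <= auto_limit:
        return "approve"
    # Uncertain score or large amount: hand over to a human inspector.
    return "review"


# Example: a small, well-evidenced tractor repair claim.
decision = decide(Claim("C-001", amount=4_500.0, damage_score=0.92))
```

Keeping a "review" path means that only clear-cut cases are settled automatically, while borderline or high-value claims still reach a person.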

#4 Automating Repetitive Tasks

Manual data entry is prone to human error, whereas data captured through scanners, document parsers, and similar tools is up to 99.94% accurate.

The microinsurance sector also suffers from self-interested behavior, where agents put personal profit before the benefit of the user. Automating the customer and agent onboarding journey can improve the distributed sales network model as well.

MaxBupa uses FlowMagic to process inbound documents, for enterprise-wide flexibility and fit. With AI, they are able to halve the manual effort while gaining operational accuracy.

With agnostic digital systems, automation can bring the challenges of mis-selling, moral hazard, and distribution costs down toward zero.

#5 Operational Efficiency

Human employees work dedicated hours, whereas chatbots can handle a large number of queries at any time of day, including weekends and holidays. This is more convenient for customers as well.

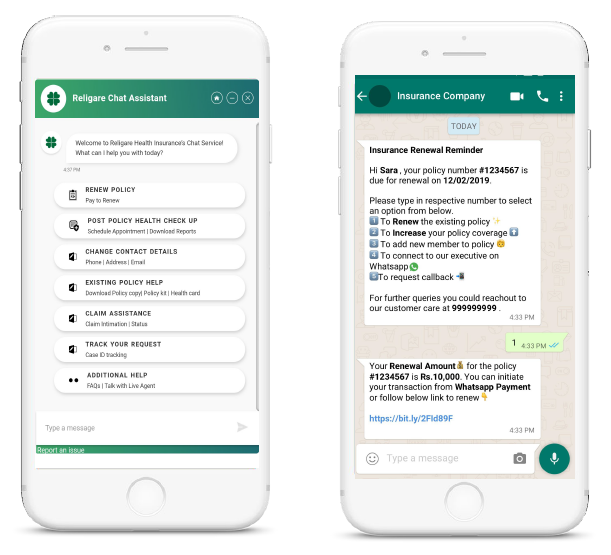

Religare, India’s leading insurance provider, has introduced AI-based chatbots that can handle customer queries without human intervention. They can help a customer buy or renew a policy, schedule appointments, update contact details, and more. This technology has helped Religare increase sales by 5X and customer interaction by 10X.

The microinsurance sector can also take advantage of chatbot technology to improve response time.

Final Thoughts

As more microinsurance products continue to surface in the market, insurers need to place the rural customer front and center in their strategic efforts. By understanding and fulfilling the rural customer’s needs and cutting operational costs through process automation, such as adding AI-powered chatbots to handle general queries or settling claims quickly without unnecessary human intervention, microinsurers can achieve better market penetration and adoption for these policies.
