In software development, the push for efficiency and quality is constant, and DORA metrics give teams a way to measure both. Developed by Google Cloud’s DevOps Research and Assessment (DORA) team, these metrics draw on research into the practices of over 31,000 engineering professionals. So, what’s the big deal about DORA metrics? They’re not just a set of numbers; they’re a roadmap for improving software delivery.

Decoding the Core Elements of DORA Metrics

Deployment Frequency

Deployment frequency measures how often a team releases changes to production. Imagine a tech company frequently updating its app with new features. Elite teams deploy this rapidly, much like an efficient assembly line that keeps rolling out new products. On the flip side, if updates are few and far between, it signals a need for process improvement.

Mean Lead Time for Changes

This is the time it takes for a change to go from code commit to production release. The shorter this interval, the more agile and responsive the team is. Elite teams achieve this in less than an hour, a testament to their streamlined workflows.

Change Failure Rate

This metric is the share of deployments that cause a failure in production, such as a bug, outage, or rollback. It’s like measuring the error rate of newly released software versions. The goal is to minimize this rate, ensuring that most updates enhance rather than hinder the user experience.

Time to Restore Service

This measures the speed at which a team can resolve a critical issue. Think of a major app crashing and the team’s efficiency in getting it back online. The quicker the restoration, the more resilient and capable the team.

Measuring DevOps Performance with DORA Metrics

Measuring DevOps performance with DORA metrics involves tracking key aspects of software delivery and maintenance. These metrics provide insights into how effectively a team develops, delivers, and sustains software quality.

- Deployment Frequency: This metric gauges how often software is successfully deployed to production. High deployment frequency indicates a team’s ability to rapidly deliver updates and features, akin to a well-oiled machine consistently producing quality output.

- Mean Lead Time for Changes: It measures the time taken from code commit to production deployment. Shorter lead times suggest a team’s agility in incorporating changes and improvements, reflecting a streamlined and efficient development pipeline.

- Change Failure Rate: This metric assesses the proportion of deployments causing failures or issues. A lower rate is indicative of robust and reliable deployment processes, highlighting a team’s proficiency in minimizing disruptions from new releases.

- Time to Restore Service: It measures how quickly a team can recover from a service outage or incident. Faster recovery times demonstrate a team’s resilience and capability in maintaining continuous service availability and quality.
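To make the four definitions above concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record, its field names, the `dora_summary` function, and the sample data are all hypothetical and chosen for illustration; real data would come from your own version control, CI/CD, and incident systems.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def dora_summary(deployments: List[Deployment], period_days: int) -> dict:
    """Summarize the four metrics for the deployments in one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")

    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]

    def mean_hours(deltas):
        # Average a list of timedeltas and express the result in hours.
        return mean(td.total_seconds() for td in deltas) / 3600 if deltas else None

    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_lead_time_hours": mean_hours(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_restore_hours": mean_hours(restore_times),
    }


# Made-up sample: four deployments in one week, one of which failed.
sample = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 11)),
    Deployment(datetime(2024, 1, 2, 9), datetime(2024, 1, 2, 13)),
    Deployment(datetime(2024, 1, 3, 9), datetime(2024, 1, 3, 15),
               failed=True, restored_at=datetime(2024, 1, 3, 16)),
    Deployment(datetime(2024, 1, 5, 9), datetime(2024, 1, 5, 13)),
]
summary = dora_summary(sample, period_days=7)
```

This sketch measures restore time from the moment of the failed deployment. Teams that record when an incident was actually detected may prefer to measure from that timestamp instead.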

Best Practices for Implementing DORA Metrics

Implementing DORA metrics effectively can significantly enhance a team’s software development and delivery processes. Let’s explore some best practices to make the most out of these metrics.

- Automating Data Collection: Automation is crucial. To calculate DORA metrics accurately, data needs to be gathered seamlessly from systems such as version control, issue tracking, and monitoring. Automation protects data integrity and saves valuable time.

- Setting Targets and Tracking Progress: Establish clear goals for each of the metrics. For instance, aim to improve your Deployment Frequency from monthly to weekly. Regularly tracking these metrics helps in assessing progress and identifying areas needing attention.

- Effective Communication of Metrics: Share DORA metrics findings and progress within the organization. This transparency fosters a culture of continuous improvement and collective responsibility.
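As an illustration of tracking progress against a target, the sketch below checks a "deploy at least weekly" goal by counting deployments per calendar week and listing the weeks that fell short. The function names, the Monday-based week boundaries, and the sample dates are assumptions made for this example, not part of any DORA tooling.

```python
from datetime import date, timedelta


def weekly_counts(deploy_dates, start, end):
    """Count deployments in each Monday-based week between start and end (inclusive)."""
    monday = start - timedelta(days=start.weekday())
    counts = {}
    while monday <= end:
        counts[monday] = 0          # include weeks with no deployments at all
        monday += timedelta(days=7)
    for d in deploy_dates:
        if start <= d <= end:
            counts[d - timedelta(days=d.weekday())] += 1
    return counts


def weeks_below_target(counts, target_per_week=1):
    """Return the week-start dates whose deployment count is under the target."""
    return [week for week, n in sorted(counts.items()) if n < target_per_week]


# Made-up deployment dates for January 2024.
deploys = [date(2024, 1, 2), date(2024, 1, 4), date(2024, 1, 10), date(2024, 1, 24)]
counts = weekly_counts(deploys, start=date(2024, 1, 1), end=date(2024, 1, 28))
missed = weeks_below_target(counts, target_per_week=1)
```

Here `missed` contains only the week starting 15 January 2024, the one week with no deployment. A list like this is easy to share in a regular team review.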

Factors Impacting DORA Metrics

Understanding the factors that influence DORA metrics is crucial for improvement.

- Deployment Frequency: Bottlenecks here can include waiting for approvals or a lack of automated testing. Streamlining and automating the deployment pipeline are key to enhancing this metric.

- Mean Lead Time for Changes: Delays can occur due to lengthy code reviews or changing requirements. Improving the efficiency of development processes and maintaining clear, stable requirements can significantly reduce lead time.

- Change Failure Rate: A high rate often points to systemic issues like inadequate testing or poor environment simulation. Enhancing test coverage and environment parity can help lower this rate.

- Time to Restore Service: Slow recovery can be due to inadequate incident management processes or poor system observability. Implementing effective incident response protocols and improving system monitoring can expedite recovery times.

Balancing DORA Metrics for Optimal Performance

Achieving optimal performance in DevOps requires a balanced approach to DORA metrics. This balance is key to ensuring both rapid delivery and high-quality software.

- Harmonizing Speed and Stability: Striking a balance between deployment frequency and change failure rate is crucial. High deployment frequency should not compromise the stability of the software, ensuring that new updates enhance rather than disrupt the user experience.

- Optimizing Lead Time and Recovery: Balancing mean lead time for changes with time to restore service ensures that quick changes don’t lead to prolonged downtimes. It’s about being quick yet careful, ensuring that speed does not sacrifice service quality.

- Continuous Improvement: Regularly reviewing these metrics helps identify areas for improvement. Teams should aim for steady gains across all four metrics, comparing their results against current DORA benchmarks.

Expanding Beyond DORA Metrics: A Comprehensive Performance View

While DORA metrics offer valuable insights into specific aspects of DevOps performance, it’s essential to complement them with other metrics for a well-rounded evaluation.

- Code Quality Metrics: Metrics such as Lines of Code (LOC) and code complexity help assess the consistency and maintainability of code. They are useful for evaluating the technical health of software.

- Productivity Metrics: These metrics assess the team’s efficiency. They include velocity, cycle time, and lead time, providing insights into how effectively the team is working towards project goals.

- Test Metrics: Metrics such as code coverage and the percentage of automated tests gauge the thoroughness of testing, which is crucial for software quality and reliability.

- Operational Metrics: These include Mean Time Between Failures (MTBF) and Mean Time to Recover (MTTR), offering insights into the software’s stability in production and maintenance efficacy.

- Customer Satisfaction Metrics: Understanding user satisfaction with the software is key. These metrics help gauge user engagement and satisfaction, reflecting the software’s real-world impact.

- Business Metrics: Metrics like customer acquisition rate, churn rate, and monthly recurring revenue (MRR) provide a business perspective, linking software performance to business outcomes.

- Application Performance Metrics: These track the software application’s performance, including aspects like availability, reliability, and responsiveness, ensuring that the software meets end-user expectations.
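For the operational metrics in the list above, here is a rough sketch of how MTTR and MTBF could be derived from an incident log. It assumes one common convention: MTTR is the average incident duration, and MTBF is the total uptime in the observation window divided by the number of incidents. The function name, input shape, and sample incidents are hypothetical.

```python
from datetime import datetime


def mttr_mtbf_hours(incidents, window_start, window_end):
    """Return (MTTR, MTBF) in hours.

    incidents: list of (started_at, resolved_at) tuples inside the window.
    """
    if not incidents:
        raise ValueError("need at least one incident")
    downtime = sum((end - start).total_seconds() for start, end in incidents)
    window = (window_end - window_start).total_seconds()
    n = len(incidents)
    mttr = downtime / n / 3600               # average time to recover
    mtbf = (window - downtime) / n / 3600    # average uptime per failure
    return mttr, mtbf


# Made-up sample: two incidents in a ten-day (240-hour) window.
incidents = [
    (datetime(2024, 1, 3, 10), datetime(2024, 1, 3, 12)),   # 2 hours
    (datetime(2024, 1, 8, 6), datetime(2024, 1, 8, 10)),    # 4 hours
]
mttr, mtbf = mttr_mtbf_hours(incidents, datetime(2024, 1, 1), datetime(2024, 1, 11))
```

With this sample the result is an MTTR of 3 hours and an MTBF of 117 hours. Other definitions of MTBF exist, so it is worth agreeing on one before comparing numbers across teams.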

DORA Metrics vs. Other Performance Metrics: Finding the Balance

While DORA metrics are crucial for DevOps efficiency, they should be complemented with other performance metrics for a holistic view.

- Beyond Efficiency: While DORA metrics focus on delivery speed and stability, other metrics cover areas like code quality and team productivity. Integrating these metrics provides a more comprehensive picture of a team’s overall performance.

- User-Centric Metrics: Including metrics that reflect user satisfaction and engagement offers insights into how the software is received by its end-users. This focus ensures that efficiency gains translate into real-world value.

- Business Alignment: Combining DORA metrics with business-focused metrics aligns software development with organizational goals. This alignment ensures that technical improvements contribute to broader business objectives, making DevOps an integral part of the company’s success.

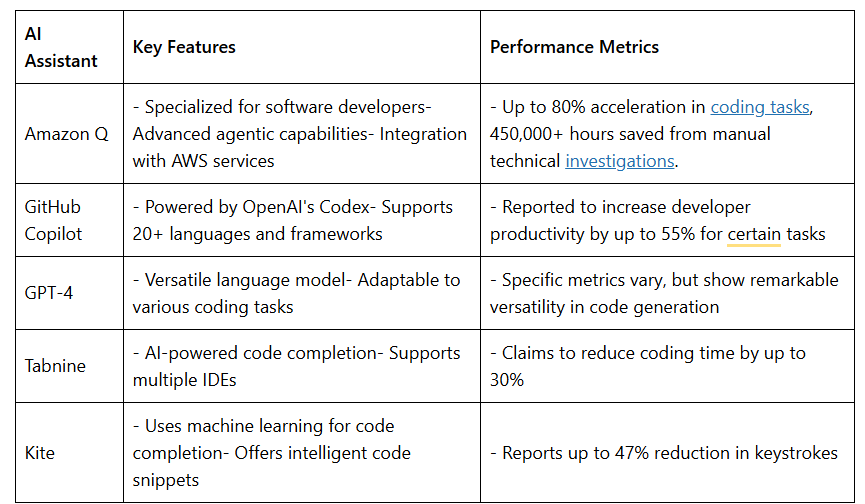

Tools for Tracking and Optimizing Performance Metrics

To effectively track and optimize performance metrics, leveraging the right tools is essential. These tools facilitate accurate data collection, analysis, and visualization, enhancing the understanding and application of DORA metrics.

- LinearB: Offers a comprehensive dashboard for DORA metrics, integrating with various Git and project management tools for real-time data analysis and insights.

- Cortex: A robust DORA metrics tool that provides detailed insights, helping teams understand and refine their development and operational processes for better outcomes.

- CTO.ai: Simplifies the tracking of DORA metrics, allowing teams to monitor and analyze their DevOps performance efficiently.

- Faros: This engineering metrics tracker captures the four key DORA metrics, presenting them in an easily digestible format for teams to assess their performance.

- Haystack: Known for its user-friendly interface, Haystack tracks DORA metrics effectively, offering actionable insights for performance enhancement.

- Sleuth: A popular choice for tracking DORA metrics, Sleuth offers detailed analytics that help teams optimize their software delivery processes.

- Velocity by Code Climate: This tool specializes in tracking the four key DORA metrics, providing teams with a clear view of their software delivery efficiency.

- DevLake: An open-source option, part of the Apache ecosystem, DevLake is a data lake and analytics platform that allows for quick implementation of DORA metrics for benchmarking projects and teams.

Implementing and optimizing DORA metrics is a cornerstone of DevOps, offering a clear, data-driven path to better software development and delivery processes. By using tracking tools like LinearB, Cortex, and DevLake, teams can not only monitor but also significantly improve their performance across key dimensions.

Whether the goal is speeding up deployments, enhancing code quality, or ensuring user satisfaction, DORA metrics provide a framework for continuous improvement. Embracing these metrics, along with a balanced approach that incorporates other performance indicators, empowers organizations not just to keep pace with the evolving technological landscape but to excel in it, aligning their DevOps practices with the growing demands and standards of the software industry.

FAQs on DORA Metrics

How do DORA metrics help in improving team collaboration in DevOps?

DORA metrics foster collaboration by providing clear, objective data that teams can collectively work towards improving. This shared focus on metrics like deployment frequency and change failure rate encourages cross-functional teamwork and communication.

Can small organizations or startups benefit from implementing DORA metrics?

Absolutely. Startups and small organizations can benefit greatly from DORA metrics, which provide a framework for scaling DevOps practices efficiently. These metrics help identify bottlenecks and optimize processes, which is crucial for growth.

Are there any common challenges when first implementing DORA metrics?

Initially, teams might face challenges in collecting and analyzing the data needed to calculate DORA metrics. Integrating tools and ensuring accurate, consistent data collection can be complex but is essential for reliable metric tracking.

How often should DORA metrics be reviewed for effective performance management?

Regular review, ideally monthly or quarterly, is recommended to effectively manage performance using DORA metrics. This frequency allows teams to respond to trends and make timely improvements.

Can DORA metrics be used alongside Agile methodologies?

Yes, DORA metrics can be seamlessly integrated with Agile methodologies. They complement Agile by providing quantitative feedback on delivery practices, enhancing continuous improvement in Agile teams.
