Until recently, the three most talked-about recruitment metrics were time-to-hire, cost-per-hire, and retention rate. The Covid-19 outbreak has added another challenge for the HR industry: managing and interacting with a remote workforce.

The impact of Covid-19 is expected to last well beyond six months, so organizations are keen to revise their HR processes. Apart from hiring and retaining talent, productivity remains a crucial concern for most employers.

Over 70% of organizations are opting for virtual recruitment methods, and technologies such as Artificial Intelligence, Robotic Process Automation, and Machine Learning are leading this change. HR chatbots are a well-known application of AI in recruitment.

5 Important Use Cases for AI-powered HR Chatbots

AI-powered HR bots can streamline and personalize recruitment and engagement processes across contract, full-time, and remote workforces.

1. Screening Candidates

Almost 50% of talent acquisition professionals consider screening candidates their biggest challenge. The absence of a standardized assessment process, a lack of appropriate feedback metrics, overdependence on employment portals, and neglect of the pool of already interested candidates are some of the factors that create bottlenecks in the recruitment process.

Finding the best fit for the organization is a challenge in itself. On top of that, the time spent screening for the "ideal candidate" often means losing that candidate altogether. Nearly 60% of recruiters say that they regularly lose candidates before even scheduling an interview.

AI can make the screening process more efficient. From collecting resumes to scanning candidates' social and professional profiles, recent activity, and interest in the industry or organization, AI can connect the dots and shortlist the "best candidates" from the talent pool. The journey begins with an HR bot that collects resumes and initiates basic conversations with candidates.


2. Scheduling Interviews

The biggest challenge with scheduling interviews is finding a time that works for everyone.

According to a recent HR survey by Yello, it takes between 30 minutes and 2 hours to schedule a single interview. Nearly 33% of recruiters find scheduling interviews a barrier to improving time-to-hire.

Scheduling barriers include time zones, prior appointments, location, and commute. AI-powered chatbots can piece these constraints together for both candidates and interviewers and propose a suitable time in seconds. Today's HR bots can also handle reimbursements, feedback, notifications, and candidates' post-interview sentiment.
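At its core, proposing a time comes down to normalizing everyone's availability across time zones and intersecting the free windows. The sketch below is a minimal illustration under stated assumptions, not how any particular chatbot works: it assumes each participant's availability is already known as a list of timezone-aware (start, end) windows, with commute, location, and prior appointments folded in beforehand. The function names `intersect` and `propose_slot` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical representation: a free window as timezone-aware (start, end).
Interval = tuple[datetime, datetime]


def intersect(a: list[Interval], b: list[Interval]) -> list[Interval]:
    """Overlap of two sorted lists of non-overlapping free windows (two-pointer sweep)."""
    result: list[Interval] = []
    i = j = 0
    while i < len(a) and j < len(b):
        # Aware datetimes compare by absolute instant, so mixed time zones are fine.
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            result.append((start, end))
        # Drop whichever window finishes first; it cannot overlap anything later.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return result


def propose_slot(calendars: list[list[Interval]], duration: timedelta) -> Optional[Interval]:
    """Return the earliest slot of `duration` that every participant has free, or None."""
    if not calendars:
        return None
    common = sorted(calendars[0])
    for calendar in calendars[1:]:
        common = intersect(common, sorted(calendar))
    for start, end in common:
        if end - start >= duration:
            return start, start + duration
    return None


if __name__ == "__main__":
    # Fixed UTC offsets keep the demo self-contained; a real system would use
    # zoneinfo.ZoneInfo("America/New_York") etc. so daylight saving is handled.
    new_york = timezone(timedelta(hours=-4))
    kolkata = timezone(timedelta(hours=5, minutes=30))
    london = timezone(timedelta(hours=1))

    interviewer = [(datetime(2024, 6, 3, 9, 0, tzinfo=new_york),
                    datetime(2024, 6, 3, 12, 0, tzinfo=new_york))]
    candidate = [(datetime(2024, 6, 3, 18, 0, tzinfo=kolkata),
                  datetime(2024, 6, 3, 22, 0, tzinfo=kolkata))]
    manager = [(datetime(2024, 6, 3, 15, 0, tzinfo=london),
                datetime(2024, 6, 3, 17, 0, tzinfo=london))]

    slot = propose_slot([interviewer, candidate, manager], timedelta(minutes=45))
    if slot:
        start, end = (t.astimezone(timezone.utc) for t in slot)
        print(f"Proposed slot: {start:%H:%M}-{end:%H:%M} UTC")  # Proposed slot: 14:00-14:45 UTC
```

Because timezone-aware datetimes compare by absolute instant, the sweep can mix New York, Kolkata, and London calendars without any manual offset arithmetic; conversion to a single zone is needed only when presenting the result.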


3. Applicant Tracking

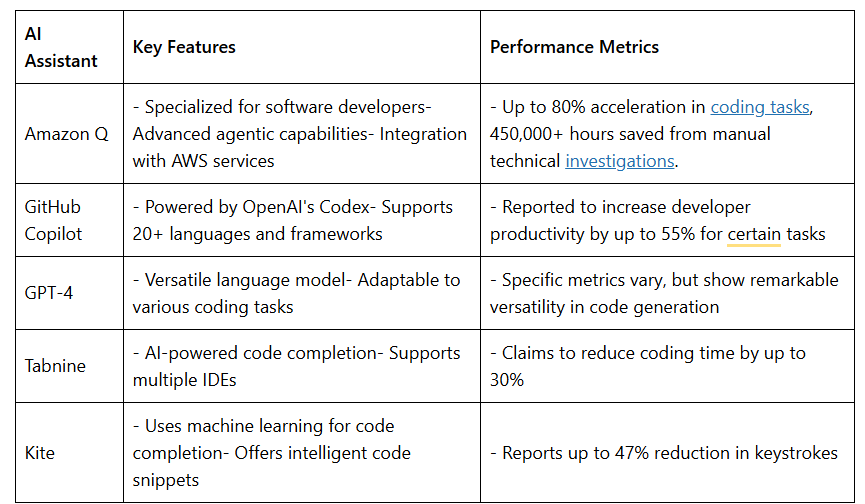

Many organizations use an Applicant Tracking System (ATS), software that handles recruitment and hiring needs. An ATS provides a central location and database for resumes gathered from job boards (employment sites).

HR chatbots with NLP capabilities can be integrated into an ATS to enable intelligent, guided semantic search.

4. Employee Engagement

Even after orientation, employees (especially new joiners) face hurdles keeping up with the organization's procedures. Reaching out to HR is one solution, but HR staff are also short on time. In most situations, employees fall back on peer support for tasks such as filling in timesheets and handling leave, holidays, and reimbursements.

Chatbots work well as self-service portals. HR departments can use bots to answer FAQs on company policies, employee training, and benefits enrollment, and to run self-assessments, reviews, votes, and company-wide polls.

HR bots with NLP capabilities can converse with employees, gauge their sentiment, and offer resolutions. 89% of HR professionals believe that ongoing peer feedback and check-ins are key to successful outcomes. In large enterprises especially, HR chatbots can engage with employees at scale. Chatbot conversations also produce real data for future analysis, which can give upper management a clearer, less filtered view of sentiment at the bottom of the pyramid.

5. Transparency across Teams

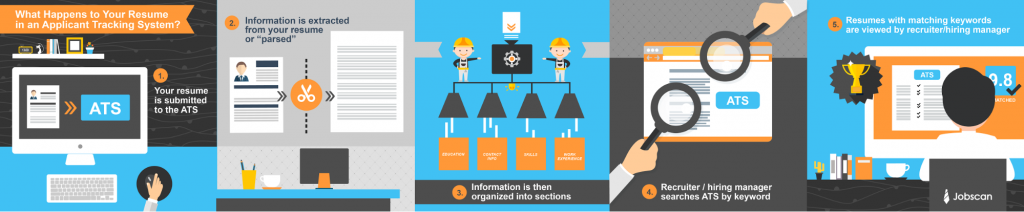

Recruiting data is often siloed and confined to the recruiters themselves. Leadership has only a high-level understanding of recruitment on the ground, and this data is often unavailable to other members of the HR department as well. Less than 25% of companies make recruiting data available to the entire HR team.

One reason for this lack of transparency is the reliance on legacy tools such as email and spreadsheets for generating reports and sharing updates.

AI-powered systems can simplify controlled data sharing, dynamic dashboards, real-time analytics, and detailed task delegation. AI chatbots integrated with an HRMS (Human Resource Management System) can make inter- and intra-departmental conversations and information requests simpler.

Final Thoughts

Today, recruiters prefer technology-based solutions that make hiring more efficient, increase productivity, and improve the candidate experience. Conversational chatbots are becoming increasingly popular because of the intuitive experiences they deliver. Chatbots can greatly simplify HR operations while achieving higher employee engagement rates than purely human-driven processes.

